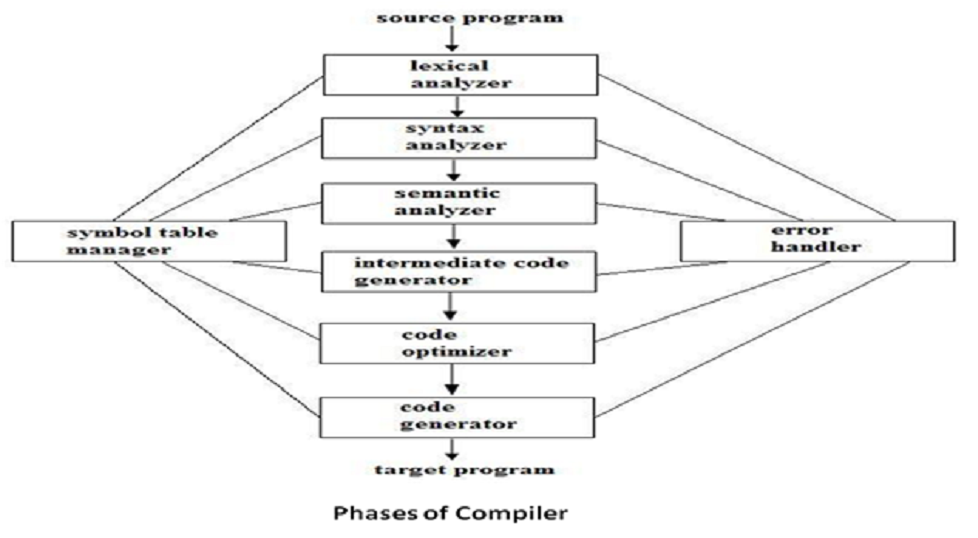

Phases of Compiler:

Compiler operates in phases.

• Each phase transforms the source program from one representation to another.

• Six phases:

– Lexical Analyser

– Syntax Analyser

– Semantic Analyser

– Intermediate code generation

– Code optimization

– Code Generation

• Symbol table and error handling interact with the six phases.

• Some of the phases may be grouped together.

These phases are illustrated by considering the following statement:

position := initial + rate * 60

Symbol Table Management:

– Symbol table is a data structure containing a record for each identifier, with fields for the attributes of the identifier.

– Record the identifier used in the source program and collect information about the identifier such as,

• its type, (by semantic and intermediate code)

• its scope, (by semantic and intermediate code)

• storage allocation, (by code generation)

• number of arguments and its type for procedure, the type returned

• Error Detecting and Reporting:

– Each phase encounters errors.

– Lexical phase determine the input that do not form token.

– Syntax phase determine the token that violates the syntax rule.

– Semantic phase detects the constructs that have no meaning to operand.

• The Analysis Phase:

– Involves: Lexical, Syntax and Semantic phase

– As translation progresses, the compiler’s internal representation of the source program changes.

• Lexical Analysis Phase:

– The lexical phase reads the characters in the source program and groups them into a stream of tokens in which each token represents a logically cohesive sequence of characters, such as, An identifier, A keyword, A punctuation character.

– The character sequence forming a token is called the lexeme for the token.

• Syntax Analysis Phase:

– Syntax analysis imposes a hierarchical structure on the token stream. This hierarchical structure is called syntax tree.

– A syntax tree has an interior node is a record with a field for the operator and two fields containing pointers to the records for the left and right children.

– A leaf is a record with two or more fields, one to identify the token at the leaf, and the other to record information about the token.

• Semantic Analysis Phase:

– This phase checks the source program for semantic errors and gathers type information for the subsequent code-generation phase.

– It uses the hierarchical structure determined by the syntax-analysis phase to identify the operators and operands of expressions and statements.

– An important component of semantic analysis is type checking.

• Intermediate Code Generation:

– The syntax and semantic analysis generate a explicit intermediate representation of the source program.

– The intermediate representation should have two important properties:

• It should be easy to produce,

• And easy to translate into target program.

– Intermediate representation can have a variety of forms.

– One of the forms is: three address code; which is like the assembly language for a machine in which every location can act like a register.

– Three address code consists of a sequence of instructions, each of which has at most three operands.

• Code Optimization:

– Code optimization phase attempts to improve the intermediate code, so that faster-running machine code will result.

• Code Generation:

– The final phase of the compiler is the generation of target code, consisting normally of relocatable machine code or assembly code.

– Memory locations are selected for each of the variables used by the program.

– Then, the each intermediate instruction is translated into a sequence of machine instructions that perform the same task.

Token: Token is a sequence of characters that can be treated as a single logical entity. Typical tokens are, 1) Identifiers 2) keywords 3) operators 4) special symbols 5) constants

Pattern: A set of strings in the input for which the same token is produced as output. This set of strings is described by a rule called a pattern associated with the token.

Lexeme: A lexeme is a sequence of characters in the source program that is matched by the pattern for a token.

|

Example: Token |

lexeme |

pattern |

|

const |

const |

const |

|

if |

if |

if |

|

relation |

<,<=,= ,< >,>=,> |

< or <= or = or < > or >= or letter followed by letters & digit |

|

i |

pi |

any numeric constant |

|

nun |

3.14 |

any character b/w “and “except" |

|

literal |

"core" |

pattern |

A pattern is a rule describing the set of lexemes that can represent a particular token in source program.